Hyde Park Barracks Museum

|

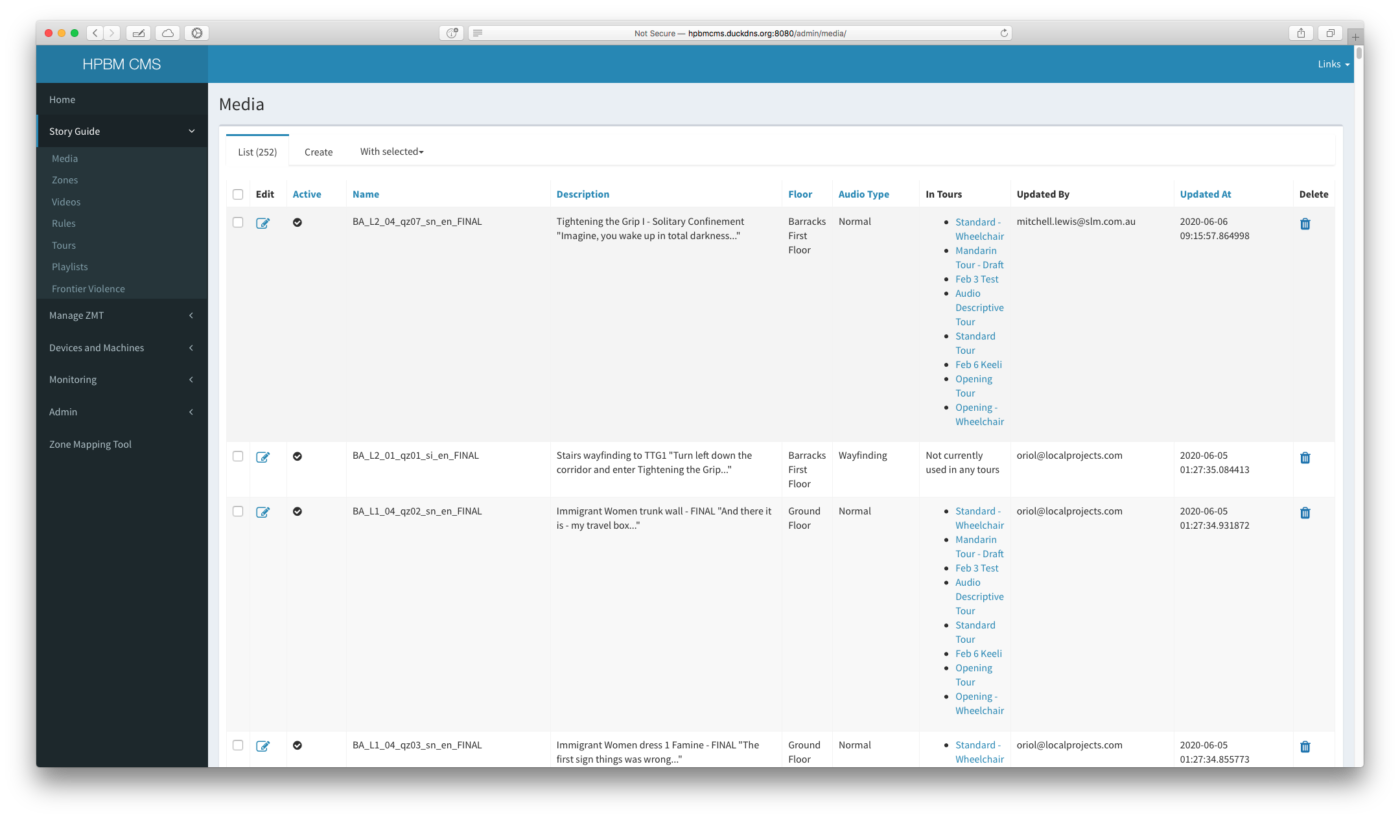

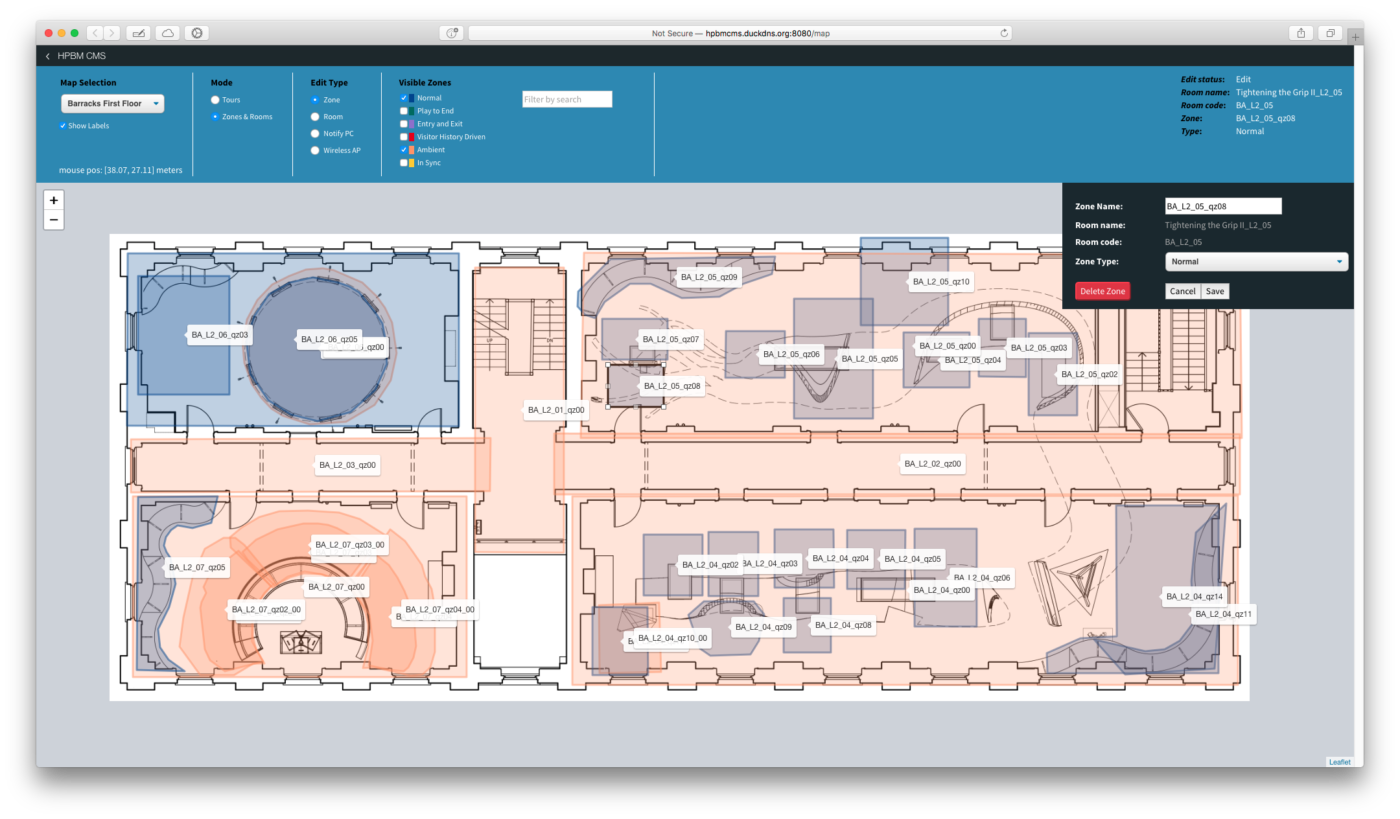

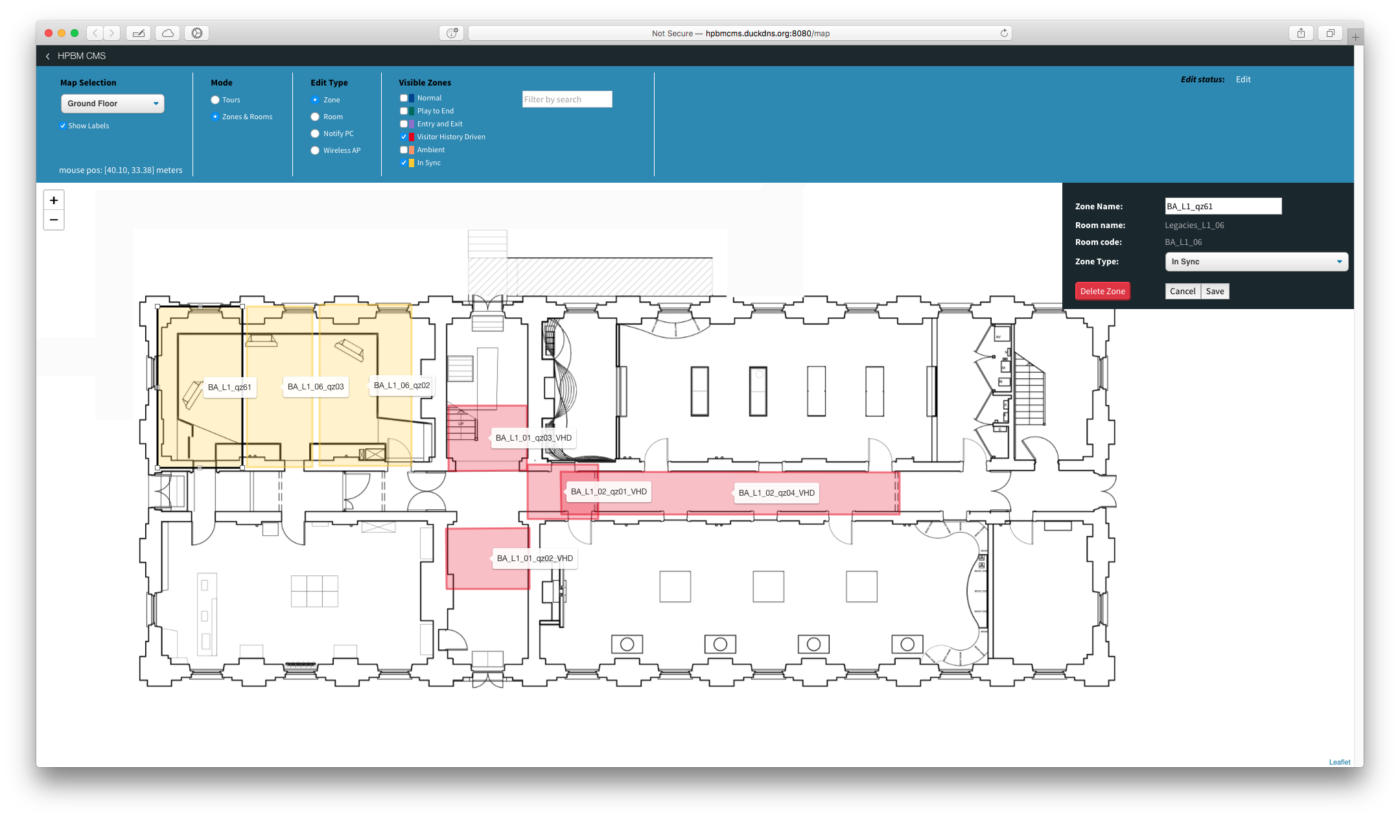

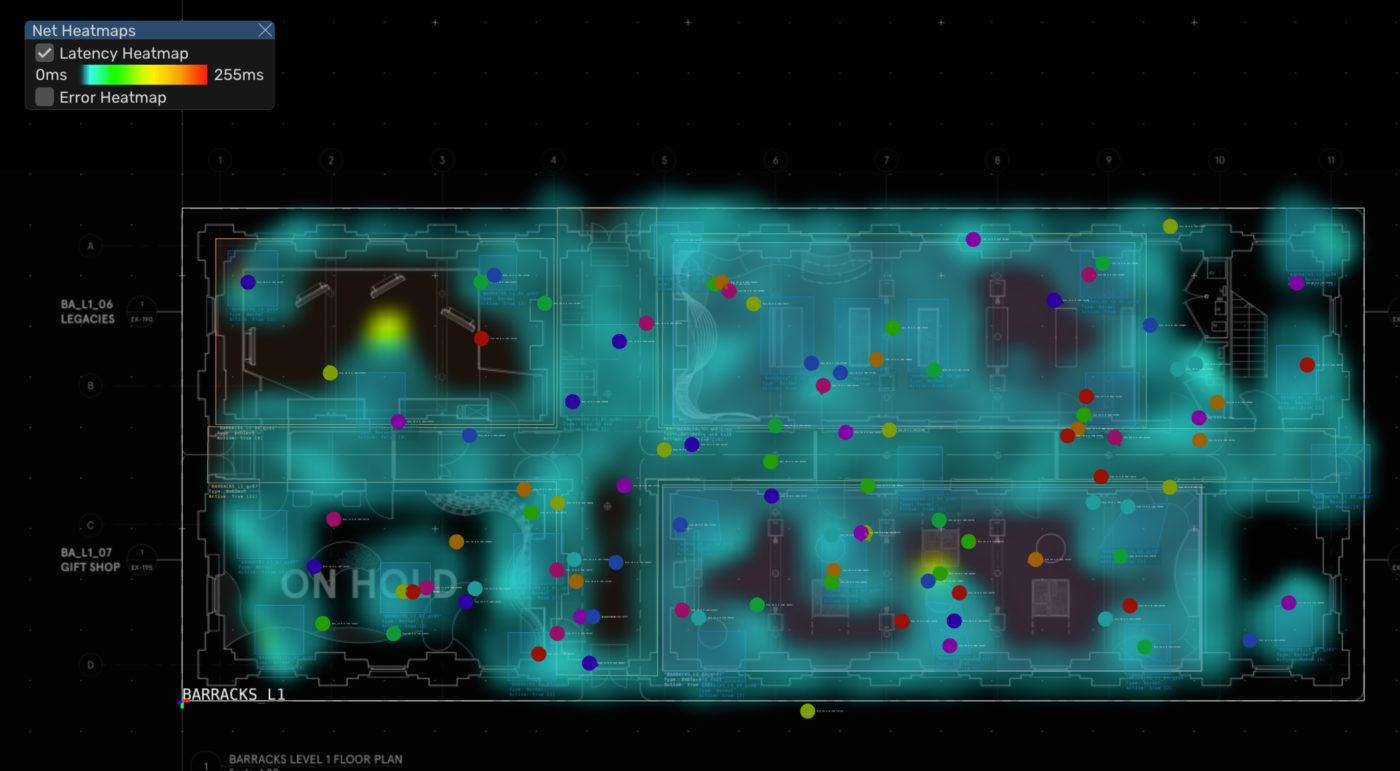

The Hyde Park Barracks Museum (part of a UNESCO World Heritage site) partnered with Local Projects to relaunch with a new audio-based experience. I was in charge of developing all the front-end software for the experience. See the Local Projects project info website.

The concept was fairly simple: imagine a museum with no text. All the information is conveyed by a private tour guide that gives you contextual information based on what you are currently seeing. The whole experience is an interactive audio guide that mixes narrators and characters, giving you a story to follow as you walk the space. The experience also includes interactive screens and projections.

Creating all this required visitors to carry a small device (an iPod Touch) with headphones. It also required a way for the system to know where each visitor is at all times; we used the Quuppa real-time locating system (RTLS) to track visitors throughout the whole space.

The StoryGuide Server

The StoryGuide Server is an openFrameworks-based C++ application that, at a high level, keeps state for every visitor and communicates with each visitor device (iPod), telling it what to do. It also coordinates the several computers around the space so that they react as designed when a visitor is nearby (trigger projections, start audio-video sync, etc.). It interfaces with the Quuppa tracking system and coordinates what every visitor hears at any time given where they are. To do so, it also takes into account what the visitor has experienced so far, since walking into certain areas can trigger different stops depending on the visitor's history around the site.

The StoryGuide Server works in conjunction with the CMS, where museum staff can edit the tours visitors will experience. The CMS web interface allows the creation of tours, giving staff a floorplan interface where they can draw zones in which visitors will hear whatever soundtracks the staff choose.
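
To make the idea of floorplan zones and per-visitor history more concrete, here is a minimal sketch of how a server could map a tracked position to a zone and pick a stop depending on whether the visitor has been there before. It is not the StoryGuide Server's actual code: the `Zone`, `VisitorState`, `contains`, and `onPosition` names, the two-stop-per-zone model, and the polygon representation are all assumptions made for illustration.

```cpp
#include <cstddef>
#include <set>
#include <string>
#include <vector>

struct Point { double x, y; };

// Hypothetical zone as it might be drawn on the floorplan:
// a polygon plus the stops it can trigger.
struct Zone {
    std::string id;
    std::vector<Point> polygon;   // vertices in floorplan coordinates
    std::string firstVisitStop;   // stop for the first entry
    std::string repeatVisitStop;  // stop for any later entry
};

// Ray-casting point-in-polygon test (even-odd rule).
bool contains(const std::vector<Point>& poly, Point p) {
    bool inside = false;
    for (std::size_t i = 0, j = poly.size() - 1; i < poly.size(); j = i++) {
        const Point& a = poly[i];
        const Point& b = poly[j];
        // Does edge a-b straddle the horizontal line through p?
        bool crosses = (a.y > p.y) != (b.y > p.y);
        if (crosses && p.x < (b.x - a.x) * (p.y - a.y) / (b.y - a.y) + a.x) {
            inside = !inside;
        }
    }
    return inside;
}

// Hypothetical per-visitor state kept by the server.
struct VisitorState {
    std::string currentZone;         // empty when outside every zone
    std::set<std::string> visited;   // zones entered so far
};

// Feed one position update. Returns the stop to start playing,
// or an empty string if nothing should change.
std::string onPosition(VisitorState& v, const std::vector<Zone>& zones, Point p) {
    for (const Zone& z : zones) {
        if (!contains(z.polygon, p)) continue;
        if (z.id == v.currentZone) return "";  // still inside the same zone
        v.currentZone = z.id;
        bool firstVisit = v.visited.insert(z.id).second;
        return firstVisit ? z.firstVisitStop : z.repeatVisitStop;
    }
    v.currentZone.clear();  // visitor is outside every zone
    return "";
}
```

In this sketch, a visitor entering a zone for the first time gets `firstVisitStop`, and re-entering it after leaving gets `repeatVisitStop`. A real system would also need to handle overlapping zones of different types and noisy position data, which this example ignores.
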
The CMS allows uploading and managing sound media, as well as assigning that media to zones throughout the museum. The system supports multiple languages, and it is designed so that an existing tour can easily be expanded with new languages just by uploading new media files.

To better design the experience, different types of sound zones exist, covering ambient sounds, narrative sound stops, wayfinding sound stops, etc. Each of these zone types comes with different behaviors. This makes it possible to layer the soundscape by overlapping zones of different types across the floorplan, allowing staff to design any tour they desire.

Visitor iPod view on top-right. StoryGuide Server view on the left.

The software also allows defining custom behaviors for sound zones by letting the user supply JSON-based scripts. By doing so, a user can define exactly what a zone should do given where a visitor has been. This allows the tours to be smart about multiple-entry zones, like hallways: the first time a visitor steps into one, you can direct them one way, and then direct them somewhere else once they have already visited the first destination.

Interactive Projections

Two spaces required interactive projections that trigger when a visitor gets near them. These triggers are all editable through the CMS, allowing you to modify the experience.

One of the installations consisted of 6 x 4k projection-mapped, pre-recorded animations that were exported as image sequences. The image sequences were too large to preload because they didn't fit in GPU memory, so they had to be streamed from disk during playback. Streaming them as plain RGB textures turned out to be too slow for the amount of data we needed to play simultaneously, so I looked into GPU texture compression and created an openFrameworks addon (ofxDXT) to tackle this.

Edge-blended 4k projections that respond to user proximity.

Animation tools

The animations shown in some of the projections are based on original illustrations from the 1800s.
To help create the enticing transitions we wanted, I wrote custom software that allowed animators to create animation masks that followed the lines of the original paintings. This software helped reduce the amount of tedious work required to create these animations.

Audio-Video Sync – Legacies Room and Frontier Violence Room

A synchronization mechanism was required to play video in sync across different computers, and also to play audio in sync with the video these computers were displaying to the visitors around them. The visitor device (iPod) contains all the soundtracks the visitor may experience during the tour, so only sync information is streamed to the iPods. Given that up to 400 visitors can be on site at the same time, the network architecture had to be designed very carefully to keep traffic to a minimum.

The system is designed so that the sync master is always the computer driving the video content; any other device must adapt its playback rate to match it. Network latency proved to be a big factor in keeping sync, so the system actively measures the latency between two parties and takes it into account when syncing them.

between each screen and the visitor device.

The StoryGuide Server plays a big role in this: when a visitor is within the area of influence of a sync-enabled player, it notifies that player that a visitor device is in its vicinity. This in turn makes the video player (the sync master) start measuring the network latency between itself and the approaching visitor device through a series of ping-reply packets. Once it learns this latency, it sends it to the visitor device together with playhead and mediaId information, so that the visitor device (iPod) can start syncing with the video player even before the visitor is near the screen.
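
The ping-reply measurement and the playback-rate adaptation described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's implementation: it assumes one-way latency is half the round-trip time (a symmetric link), smooths it with a median over a small window, and uses a simple clamped proportional correction for the playback rate. `LatencyEstimator`, `SyncMessage`, `expectedMasterPlayhead`, `correctedRate`, and the gain and clamp values are all hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Estimates one-way latency from ping/reply round trips.
// Assumption: the link is symmetric, so one-way latency = RTT / 2.
class LatencyEstimator {
public:
    // Both timestamps are read from the sync master's clock, in seconds.
    void addRoundTrip(double sentAt, double replyReceivedAt) {
        assert(replyReceivedAt >= sentAt);
        samples_.push_back((replyReceivedAt - sentAt) / 2.0);
        if (samples_.size() > kWindow) samples_.erase(samples_.begin());
    }

    // Median of the recent samples, so a single slow packet is ignored.
    double oneWaySeconds() const {
        if (samples_.empty()) return 0.0;
        std::vector<double> sorted = samples_;
        std::sort(sorted.begin(), sorted.end());
        return sorted[sorted.size() / 2];
    }

private:
    static constexpr std::size_t kWindow = 9;  // illustrative window size
    std::vector<double> samples_;
};

// Hypothetical message the sync master sends to the visitor device.
struct SyncMessage {
    std::string mediaId;
    double playhead;       // master playhead when the message was sent (s)
    double oneWayLatency;  // measured latency to this device (s)
};

// Where the master is expected to be when the device reads the message.
double expectedMasterPlayhead(const SyncMessage& m) {
    return m.playhead + m.oneWayLatency;
}

// Playback rate the device should use to drift toward the master.
// Proportional correction, clamped so the pitch change stays small.
double correctedRate(double devicePlayhead, const SyncMessage& m,
                     double gain = 0.5, double maxDeviation = 0.05) {
    double error = expectedMasterPlayhead(m) - devicePlayhead;
    double rate = 1.0 + gain * error;
    return std::max(1.0 - maxDeviation, std::min(1.0 + maxDeviation, rate));
}
```

With these assumed defaults, a device that is 10 ms behind the master would play at roughly 1.005x until it catches up, while a device that is far off is limited to a 5% speed change. Large errors would more sensibly be handled by seeking directly to the expected playhead, which this sketch leaves out.
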
By the time the visitor is in a position to see the video content, the audio that starts playing is already in sync with the video on the screen, making the sync seamless.

as three computers are required to play back the 9 x 4k video content

The network latency measurement keeps going as long as the visitor is inside the video player's area of influence, to adapt to any ongoing changes in network latency. Once the visitor leaves the area, the video player is informed, and it stops measuring network latency and sending sync information to the visitor device.

The Visitor Device iOS App

Museum visitors carry an iPod Touch with a pair of headphones, running custom software for the experience. This application has almost no user interface; it only briefly shows a minimal GUI for staff members to pick a tour for each visitor.

Coverage / Awards

Australian Museums And Galleries National Awards

Team

Creative Direction: Elle Barriga and Keeli Shaw |